Good Monday Morning

It’s October 30th, and Halloween is tomorrow. Here’s a comforting note: researchers have not been able to find any instances of children being seriously harmed or killed by doctored trick-or-treat candy.

Today’s Spotlight is 839 words — about 3 minutes to read.

Headlines to Know

- California authorities suspended Cruise’s robotaxi permits after one of its driverless vehicles dragged a pedestrian who had been struck by another car.

- Elon Musk boorishly trolled Wikipedia during its annual fundraising campaign, offering to donate $1 billion if the site changed its name to “Dickipedia.” Separately, Fidelity again marked down the value of its stake in Musk’s X, which now sits 65% below the original investment less than a year after the purchase.

- Google announced eight new accessibility features for everyday tasks, with a strong emphasis on voice control and settings that can be customized to individual needs.

Spotlight on Meta Sued Over Kids’ Health

Forty-one states and the District of Columbia filed suit against Meta, alleging that the company harms children’s mental health with addictive features built into its platforms, including tactics designed to keep young users online longer, and that it harvests children’s personal data.

The lawsuit is the culmination of growing concerns about Meta’s child-focused products, such as Messenger Kids and the proposed Instagram for Kids, which drew strong opposition from psychologists and other experts who urged Meta to abandon them.

Documents provided by corporate whistleblower Frances Haugen in 2021 exposed Meta’s internal research on how its products affect children’s mental health, sparking bipartisan outrage in Congress and days of headlines. The resulting scrutiny of Meta’s planned services for children under 13 eventually derailed those plans.

One damning internal slide read, “We make body image issues worse for one in three teen girls.”

As this blockbuster case unfolds over the coming months, Meta is sure to face withering criticism rooted in more than five years of negative headlines about privacy and child safety. The scrutiny will almost certainly extend to other networks popular with children, including YouTube, Snap, and TikTok.

Practical AI

Quotable: “There it was, John’s voice, crystal clear. It’s quite emotional. And we all play on it, it’s a genuine Beatles recording.”

— Paul McCartney, in a statement describing “Now and Then,” a song to be released this week after AI tools allowed producers to isolate John Lennon’s long-obscured vocals on an old demo recording.

Google Bets On Anthropic: Following Microsoft’s $10 billion investment in ChatGPT maker OpenAI, Google announced an investment of up to $2 billion in competitor Anthropic.

Tool of the Week: Canva’s new Magic Studio does all sorts of nifty tricks, including translation, repurposing past creative work, text-to-video, and lasso-style editing tools.

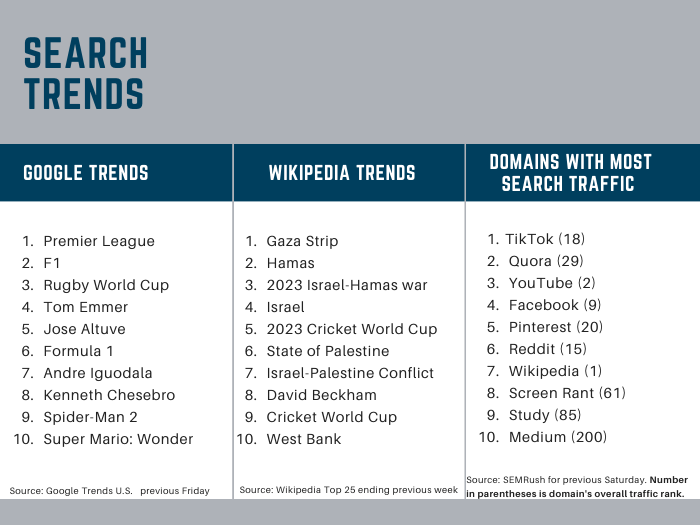

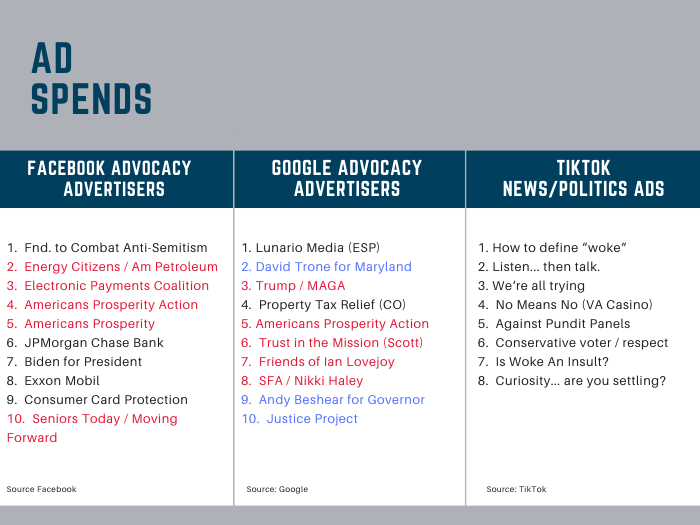

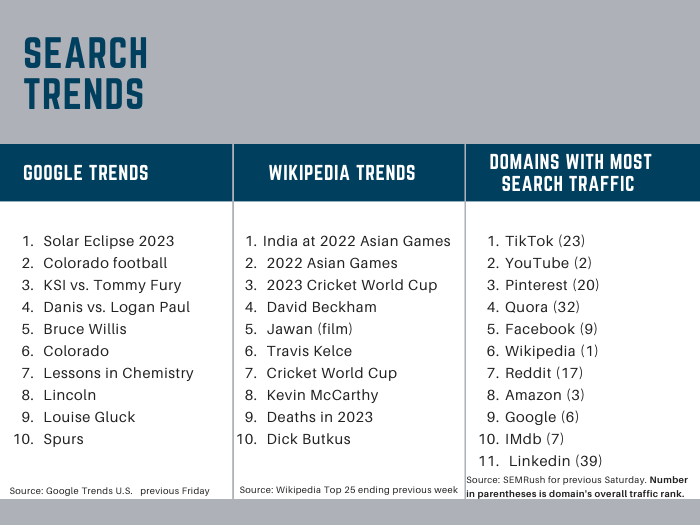

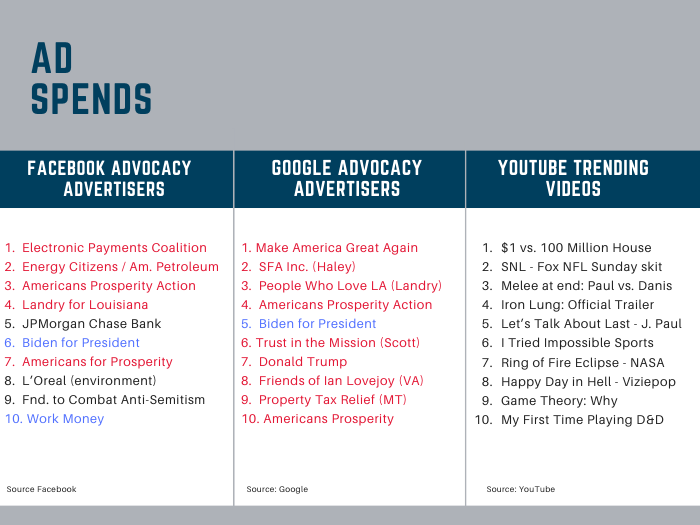

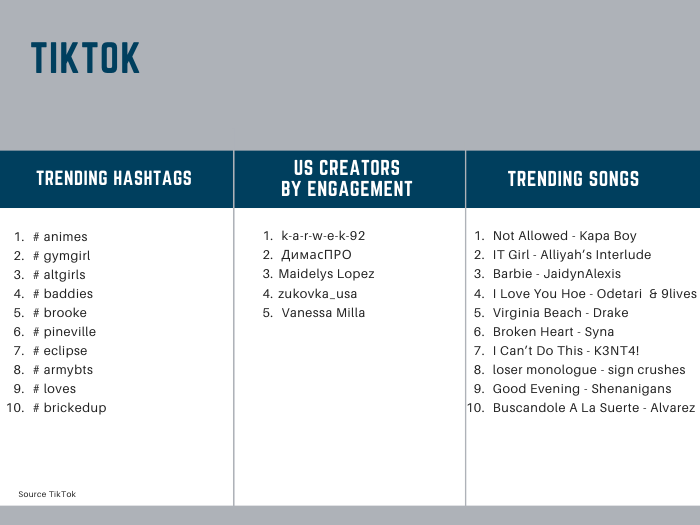

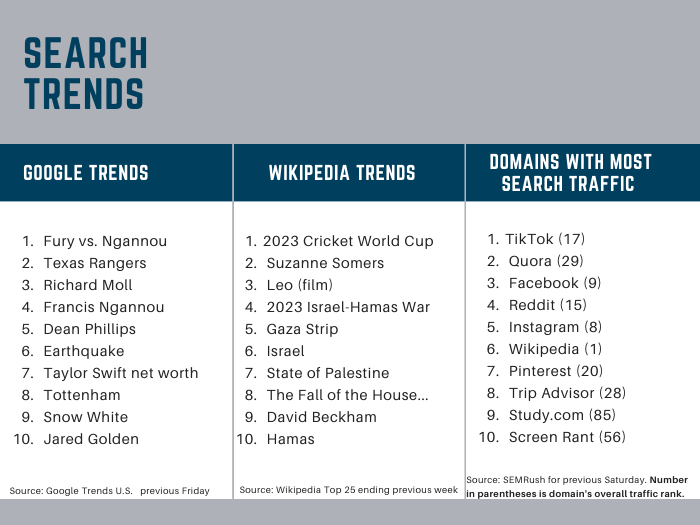

Trends & Spends

Did That Really Happen — People Are Not Flying to Austria Instead of Australia

A funny viral meme claimed that a special counter at the airport in Salzburg, Austria, reroutes 100 passengers a year who meant to fly to Australia. Such mix-ups may well happen, but the Washington Post confirmed that the numbers are not tracked and that no such counter exists.

Following Up — MGM’s Costly Hack

MGM Resorts said the cyberattack it suffered after a help desk employee inadvertently gave an outsider network access will cost the company $100 million in lost profit.

Protip — Going Off Grid with a Phone

The Markup has created an easy-to-use guide showing how to use a cell phone while staying off-grid. Caveat: it takes more than a burner phone or a one-time setup, so you have to seriously want to do this. Even so, it makes a great read as a thought experiment.

Screening Room — De Niro & Butterfield for Uber One

Science Fiction World — Space Pollution Fine

The FCC has fined Dish Network $150,000 for failing to move its now-defunct EchoStar-7 satellite far enough away from operational satellites. It’s the first time the agency has taken enforcement action over space debris.

Coffee Break — The Headline Clock

Check out this whimsical clock that displays each new minute using clickable headlines from today’s news, in a playful blend of words and numbers.

Sign of the Times