It’s January 9th. A GoFundMe campaign launched two years ago by Damar Hamlin to fundraise for his community ballooned to nearly $9 million following his dramatic on-field cardiac arrest. Hamlin’s contract, like most in the NFL, is not guaranteed.

Spotlight is off next week to observe Rev. Martin Luther King, Jr. Day. Today’s Spotlight is 866 words, about 3 minutes to read.

Spotlight On … LastPass

The LastPass hack we told you about last August was more severe than originally claimed, and some cybersecurity experts say that the company is improperly downplaying the risk.

LastPass now acknowledges that it was hacked in August and November. In late August, it reported that its code had been stolen, but that no customer action was required.

Another breach occurred in November. One month later, LastPass acknowledged that the November hack used information from the August hack, but said “the majority of customers” still didn’t have to do anything because customers who followed its “suggested” best practices would be fine.

Multiple security researchers disagree, stating that hackers can build profiles of individuals using LastPass user data and that passwords used on the service were not as secure as random passwords would be. Further, LastPass was not requiring passwords to follow the company’s password strength recommendations.

We stopped recommending and using LastPass in 2019 after its third security breach in four years. It was apparent that the company, acquired in 2015, was in trouble. The company acknowledged two subsequent vulnerabilities, one each in 2020 and 2021.

Researchers and competitors also question LastPass’ claim that guessing a master password would take millions of years. An 11-character password can be guessed in about 25 minutes if humans don’t use random characters and only use familiar words and acronyms, claims 1Password executive Jeffrey Goldberg.
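To see why the gap between random and human-chosen passwords is so large, here is a rough back-of-the-envelope sketch. It is not Goldberg's calculation and does not try to reproduce his 25-minute figure. The guess rate, the 94-character alphabet, and the 20,000-word vocabulary are all assumptions chosen for illustration.

```python
import math

# Assumed attacker speed, purely illustrative. Real rates depend on the
# hashing scheme and the attacker's hardware.
ASSUMED_GUESSES_PER_SECOND = 1_000_000

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def keyspace_bits(choices_per_position: int, positions: int) -> float:
    """Bits of entropy when each position is picked uniformly at random."""
    return positions * math.log2(choices_per_position)


def years_to_exhaust(bits: float, guesses_per_second: float) -> float:
    """Years needed to try every candidate in a keyspace of the given size."""
    return (2 ** bits) / guesses_per_second / SECONDS_PER_YEAR


# 11 characters drawn at random from the 94 printable ASCII symbols.
random_bits = keyspace_bits(94, 11)

# A human-style password modeled (as an assumption) as two familiar words
# picked from a 20,000-word vocabulary.
human_bits = keyspace_bits(20_000, 2)

print(f"random 11 characters: {random_bits:.1f} bits")
print(f"two familiar words:   {human_bits:.1f} bits")
print(f"years to exhaust random keyspace: "
      f"{years_to_exhaust(random_bits, ASSUMED_GUESSES_PER_SECOND):.3g}")
print(f"years to exhaust human keyspace:  "
      f"{years_to_exhaust(human_bits, ASSUMED_GUESSES_PER_SECOND):.3g}")
```

The exact numbers shift with the assumptions, but the pattern holds: a password built from familiar words has a far smaller search space than a random one of the same length.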

Other voices:

“I would consider all those managed passwords compromised,” the NYT quotes one researcher as saying.

A senior engineer on Yahoo’s security team told Wired, “I used to support LastPass. I recommended it for years and defended it publicly in the media … But things change.”

Your next steps: Get a free Bitwarden account or a paid 1Password account. Your password manager should select and store your passwords. Use two-factor authentication everywhere possible in addition to a randomly generated password. Passkeys are coming, but they won’t be available on every service and with every device.
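For the curious, a randomly generated password looks roughly like what the following sketch produces. This is not how Bitwarden or 1Password implement their generators. It is a minimal illustration using Python's standard `secrets` module, and the `generate_password` function name and the default length of 20 are our own assumptions.

```python
import secrets
import string

# Letters, digits, and punctuation: 94 printable ASCII symbols in total.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a password of `length` characters, each chosen with a
    cryptographically secure random source (hypothetical helper)."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(generate_password())
```

In practice, let your password manager do this for you so the result is stored the moment it is created.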

Interested in passkeys? See our November 21 Spotlight.

3 More Stories to Know

1) Anker’s Eufy device unit continues to fight exposés in The Verge about misrepresentations regarding camera data. The Verge claims that Eufy deleted ten privacy promises on its website instead of answering questions about how security camera footage is stored.

2) German government officials met with Twitter owner Elon Musk last week regarding Twitter’s previous commitment to remove disinformation from its site. Twitter and other Big Tech companies must do so by mid-June to comply with new laws.

3) Big Tech companies continue to face action from regulatory authorities.

Epic Games – the Fortnite game developer agreed to pay $520 million in December to settle FTC complaints about child privacy requirements and tricking players into paying for upgrades.

Meta – agreed to pay $725 million to settle a lawsuit regarding illegal data sharing with Cambridge Analytica. Separately, Meta was fined $414 million by European regulators last week for allowing ads based upon user activity.

Amazon – avoided fines, but must comply with a seven-year agreement governing how it interacts with third-party sellers on the site to settle EU antitrust complaints.

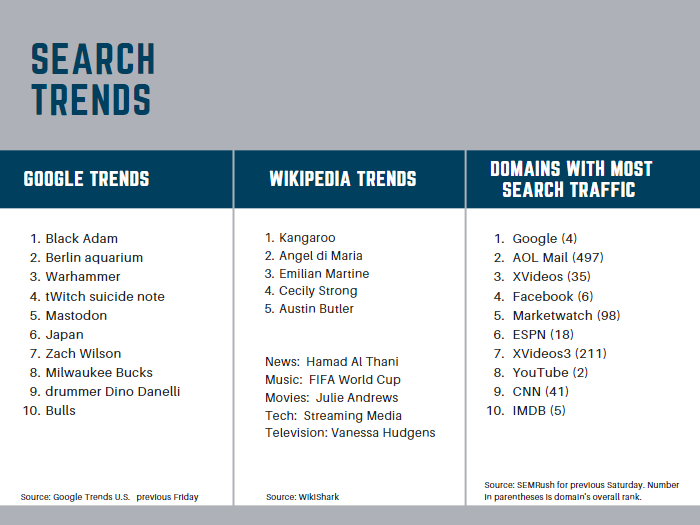

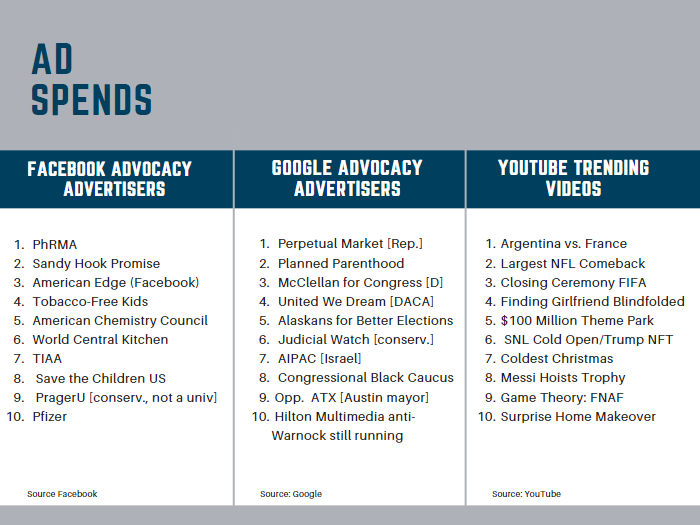

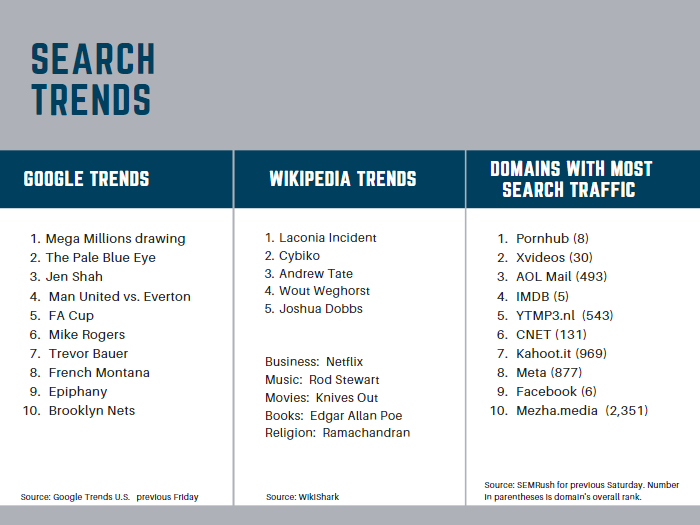

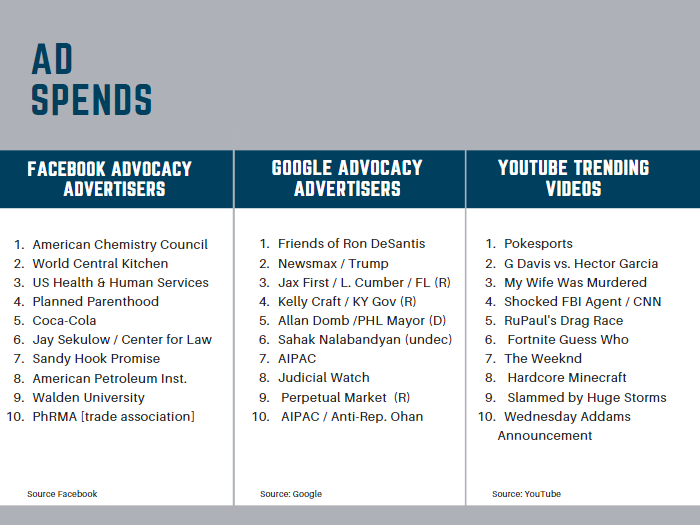

Trends & Spends

Did That Really Happen? — Orange Dots on iPhones

An orange dot showing on the top right of an iPhone display indicates that a running application has microphone access, not that “someone is listening to you right now” as a viral post claims. Snopes explains the alarmist take on a nice privacy feature.

Following Up — ChatGPT and Bing

We wrote about ChatGPT before the holidays and have been having a blast testing it since then. Now there’s word that Microsoft is preparing to enhance its Bing search engine with the technology. Three years ago, Microsoft invested $1 billion in OpenAI, the organization that created ChatGPT.

Protip — How to Use 1Password

The best primer on how to start using 1Password was published by the New York Times last summer. There are screenshots, how-tos, and tips.

Screening Room — France’s Loto

Science Fiction World — Fast Food Automat

McDonald’s is testing a restaurant where ordering is done via kiosk or online and the food is served via robotics. The concept is only at one location near Fort Worth for now, and food continues to be prepared by humans.

Coffee Break — Chrono Quest

Chrono Quest gives players three tries to place six historical events in chronological order. Like Wordle, everyone gets the same quiz, and unlike Wordle, streaks continue if you miss a day.

Sign of the Times