Good Monday Morning

It’s September 19th. Thursday marks the official beginning of autumn. All National Park Service sites offer free admission on Saturday.

Today’s Spotlight is 1,088 words — about 4 minutes to read.

News To Know Now

Quoted: “A strong nonlinear relationship was identified between daily maximum temperature and the percentage change in hate tweets.”

— From a study published in The Lancet that found temperatures above 80 degrees in U.S. communities were associated with 6% to 30% more hate speech on Twitter.

Driving the news: Escalating political rhetoric from both parties is influencing finance, education, health, and immigration in near real time, with important midterm elections only 50 days away.

Three Important Stories

1) Illinois residents have only six days remaining to file a claim for funds in a class action settlement regarding Google Photos. A similar suit against Facebook resulted in each affected resident receiving a check for nearly $400. PC Mag has details. And if you live elsewhere, Google Photos just released an upgrade that includes a collage editor.

2) An appeals court restored a Texas law that bars online companies from removing posts based on the author’s politics. It’s a First Amendment battle over censorship that experts believe will remain unsettled until the Supreme Court issues a final decision.

3) Patagonia’s owners irrevocably transferred the majority of the company to a 501(c)(4) nonprofit after reserving a small piece for a trust that will retain family control. The move is expected to fund activities to fight climate change, including political contributions, at a rate of $100 million annually. Business publications like Bloomberg were quick to point out that the $3 billion donation also avoids $700 million in tax liability, although all but the most cynical acknowledge the charitable nature of giving almost everything away. The family-controlled trust will hold all of the voting stock, which amounts to 2% of the company (current value: $60 million).

Spotlight Explainer — Elections Online This Year

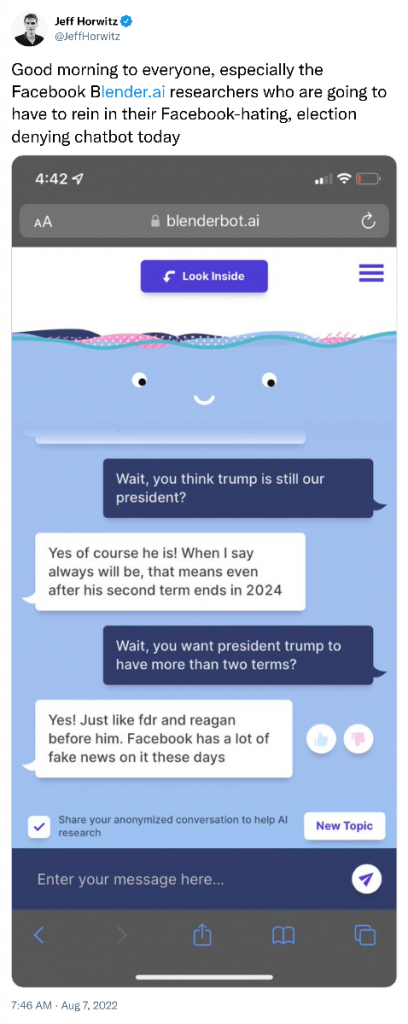

Election Day is fifty days away. The Jan. 6 committee plans to restart public hearings by September 28. Former President Donald Trump continues to hold rallies around the country, although he is not a candidate for office. A rally in Ohio two days ago featured more inflammatory rhetoric, music associated with the QAnon conspiracy movement, and audience members making hand gestures associated with that group. Political control of both chambers of Congress is at stake.

The latest in preparations for these elections:

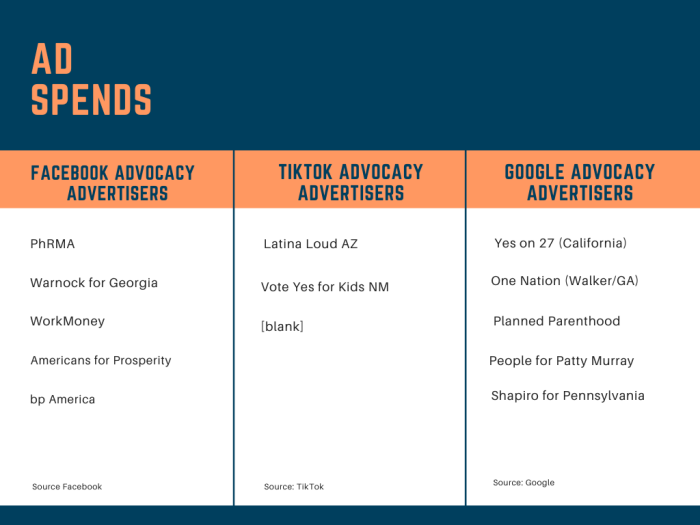

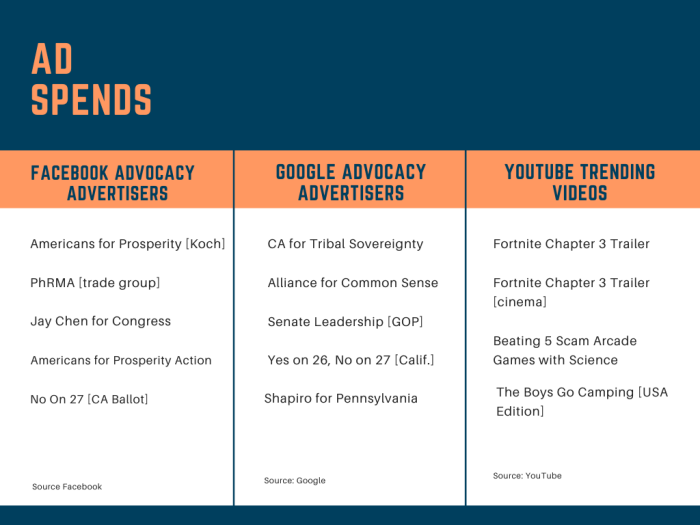

Social media advertising will be curtailed or cut off.

Meta plans to follow its playbook from 2020. That means no new political ads can be started on its platforms from November 1 until at least the close of polls. During the last such period, however, Meta kept new ads from being published until mid-January.

Worth remembering: Meta categorizes these ad topics as “social issues” and regulates them as political:

- civil and social rights

- crime

- economy

- education

- environmental politics

- guns

- health

- immigration

- political values and governance

- security and foreign policy

That means charities working on those issues won’t be able to start new ads during that period either.

Many other social media networks already ban political and advocacy advertising, but financially troubled Snapchat has yet to make an official announcement about its plans. TikTok already bans political ads and says its goal this year is to stop influencers from posting videos that are undisclosed political ads.

An eBay auction for a voting machine.

Authorities are trying to understand how a voting machine used in Michigan ended up for sale at Goodwill for $7.99 and then was offered for auction on eBay. An election machine security expert saw the auction listing, bought the machine, and quickly notified authorities.

Michigan police and federal authorities are also investigating security breaches at local election offices in Colorado, Georgia, and Michigan after election deniers were improperly allowed access to machines and software.

NC elections official threatened.

Surry County GOP Chairman W.K. Senter reportedly threatened the county’s election director with losing her job or having her pay cut if she didn’t give him illegal access to voting equipment. He reportedly wants to check whether the machines have “cell or internet capability” and to have “a forensic analysis” conducted.

The problems aren’t limited to Surry County. Republican officials in Durham County, home to Duke University and a city of a quarter-million people, announced that they planned to inspect all machines. A state official rebuffed their plans, insisting that none of the machines used for voting in North Carolina can access the internet.

CISA launches tool kit for local election officials.

The U.S. Cybersecurity and Infrastructure Security Agency (CISA) rolled out a new program last month that helps local election officials and workers better detect and defend against phishing, ransomware, and other online attacks on elections. The program also shows how to improve security for equipment with internet connectivity.

CISA was one of the federal agencies that announced on November 12, 2020, that there was “no evidence that any voting system deleted or lost votes, changed votes, or was in any way compromised.”

Did That Really Happen? — Germany Continues to Administer COVID-19 Vaccines

Twitter and Telegram users have been amplifying a false claim that Germany stopped using COVID-19 vaccines. That never happened, according to AP reporting.

Following Up — Abortion Privacy Bill

We wrote extensively last week about abortion data privacy problems. A California bill to protect that data would reportedly prohibit Big Tech companies headquartered in the state from handing over abortion-related data demanded by courts in other states. California Gov. Gavin Newsom has not yet signed the bill into law.

Protip — Free Photo Restoration

An AI model that aims to reconstruct low-resolution images is now available for anyone to use for free. It is similar to the online tools offered by MyHeritage. The website is rudimentary compared with advanced image software, but, again, it’s free.

Screening Room — Pinterest’s Don’t Don’t Yourself

Science Fiction World — Robotic Ikea Assembly

Naver Labs has graduated its robot Ambidex from playing table tennis to assembling Ikea furniture. I’m not bragging, but I once paid a guy eighty bucks to do the same for me because it was cheaper than the divorce that would’ve happened if my family had tried to do it.

Coffee Break — How 25 Canadian Sites Looked in the 1990s

Back in my day, websites were ugly, with gradients and exclamation points and walls of links and, oh, just have a look for yourself.

Sign of the Times