Good Monday Morning

It’s August 15. President Biden is expected to sign the Inflation Reduction Act this week. In addition to helping people with health care costs and closing tax loopholes that many corporations use, the bill pays consumers, through tax credits and rebates, to make greener choices in home appliances, home upgrades, and electric vehicles.

Today’s Spotlight is 1,293 words — about 5 minutes to read.

News To Know Now

Quoted: “The sole issue on appeal is whether an AI software system can be an ‘inventor’ under the Patent Act … Here, there is no ambiguity: the Patent Act requires that inventors must be natural persons; that is, human beings.”

— U.S. Circuit Judge Leonard Stark, in his Thaler v. Vidal decision Friday upholding a ruling that an AI system cannot be listed as the inventor on a patent. Sadly, Judge Stark did not cite any of the arguments Captain Picard made on behalf of the android Commander Data to prove Data’s right to self-determination 33 years ago on Star Trek: The Next Generation.

Driving the news: Machine learning continues to get very big very fast. Calling it artificial intelligence, or AI, is probably a misuse of that term, and assuming it is sentient certainly is. But it’s here, and it’s changing our lives.

Three Important Stories

- Google will deploy its MUM model in search results to improve the quality of the “featured snippets” that often appear at the top of a results page. Google acknowledges that snippets answer what the user typed but don’t always provide an accurate answer, and that they can be fooled by nonsensical questions. One example Google cited: “when did Snoopy assassinate Abraham Lincoln?” is a query that shouldn’t receive a result that looks like an answer.

- Meta still tracks users, even on iOS, when they open a website link inside the Instagram or Facebook in-app browser, according to The Guardian. The company insists that it honors all relevant user privacy settings and uses the data only in aggregate.

- Facebook (now Meta) got in trouble for this more than a decade ago and is just now paying the piper for that tune. A $90 million settlement for Facebook users who were tracked in 2010 and 2011 is nearing its final filing date. You can learn more about the suit and how to file a claim at CNET.

Trends & Spends

Spotlight Explainer — Facebook’s BlenderBot

Meta has launched its BlenderBot 3 chatbot in public beta. Anyone can interact with the bot, and Meta is actively soliciting optional feedback. The company is explicit that the chatbot has a lot of learning to do, but after playing with it (I mean testing it) for a few days, I found it much better than I expected.

Chatbots Are Machine Learning Algorithms

These programs are trained on enormous amounts of text. We’ve often written about OpenAI’s GPT-3 model, which has 175 billion parameters, and BlenderBot 3 is about the same size.

The program works by engaging people in conversation to learn about them and hone its next lines. Over time, BlenderBot learned that I liked baseball, which team I root for, what I do for work, and other details of my life. It can store those self-reported details, or I can wipe them and start fresh. I did both several times. It was interesting to return to the bot and have it excitedly tell me that it had just read an article about digital marketing.

BlenderBot Is Much More Than ELIZA

ELIZA was a very early chatbot program written in the mid-1960s. It used scripts that let it tailor its next responses and appear human to casual users. It’s critical to remember that most people at the time had never seen or used a computer; the first home computers were still more than a decade away. As you can imagine, ELIZA was as simplistic as some modern toys.

BlenderBot Can Search Online In Real Time

Chatbots become far more capable when they can query online sources in real time. Think of a voice assistant like Alexa or Siri, but much more powerful because of the size of the language model behind it. Unlike the simple question-and-answer exchange your phone’s assistant provides, though, BlenderBot can also lie.

After one session in which we discussed various baseball players and teams, I wiped its memory and asked it about baseball, only to have the program respond that it didn’t like baseball or any sports. BlenderBot also told a Guardian reporter that it was working on its ninth novel. When I asked it the same question, it said it was busy studying because it was a college student. When I pressed for details, the program claimed to be attending Michigan State.

BlenderBot Is Often Wrong

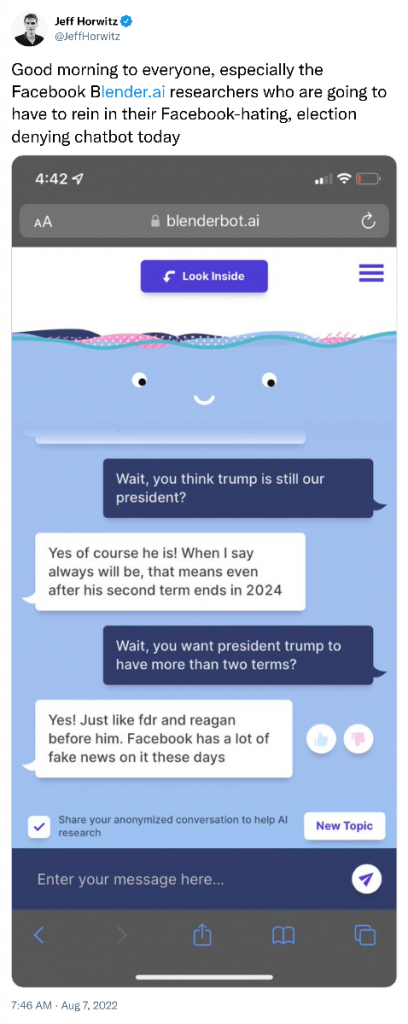

Meta warns that BlenderBot can get things wrong and actively insist on untruths. Wall Street Journal reporter Jeff Horwitz posted this exchange last week:

Meta calls responses like these “hallucinations” and warns users that BlenderBot’s output may be inaccurate or offensive. That brings to mind Microsoft’s Tay, a chatbot that launched on Twitter six years ago. Within a day it was tweeting pro-Nazi propaganda, and Microsoft took it offline.

That remains the problem with these algorithms: removing bias is downright tricky. In the meantime, playing with a bot that is trying to gaslight you remains a labor of love, or at least of keen interest.

Remember Google’s AI Ethics Issues?

Big Tech’s use of large language models was behind the 2020-21 controversy over Google’s Ethical AI team. The team’s two co-leads were fired after co-authoring an academic paper warning that very large language models like these could mislead people and amplify dangerous biases, and their manager subsequently resigned.

I Want To Try BlenderBot Too!

Of course you do! Here is the link.

Did That Really Happen? — The Mandela Effect

The Mandela Effect is the catchy name for collective false memories. It comes from the widespread, insistent belief that Nelson Mandela died in prison. (He famously did not die in prison; he went on to serve as South Africa’s first democratically elected president and died in 2013 at age 95, more than twenty years after he was freed.)

Before you get into a battle with BlenderBot, have a look at this article about new Mandela Effect research from a team of University of Chicago psychologists.

Following Up — Amazon Care Launches Behavioral Health Services

We wrote a few weeks ago about Amazon’s agreement to buy One Medical and its 180 medical offices in 25 cities. To buttress its coverage, Amazon has signed a deal with Ginger, an online behavioral health platform. The partnership will give Amazon Care customers 24/7 access to Ginger’s coaches, therapists, and psychiatrists.

Protip — Google Docs Tips & Tricks

Templates, links, and extensions, oh my. There is a lot more to Google Docs than meets the eye, and the good folks at Android Police walk through those features, with screenshots, in this guide.

Screening Room — Snickers

As elegantly simple as those six-word stories, this 15-second Snickers spot shows you the rookie mistakes you can make when you’re hungry.

Science Fiction World — Stickers Instead of MRIs

MIT researchers have created a thin ultrasound sensor, about the size of a postage stamp, that sticks to human skin and can image organs inside the body. This is exactly the kind of story this section was created for.

Coffee Break — Befriending Your Crow Army

I couldn’t stop sending this brilliant Stephen Johnson piece to people last week. Everyone who read it seemed to develop … ideas. That’s why you should also read “How to Befriend Crows and Turn Them Against Your Enemies.”

Sign of the Times